1. Flannel

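Flannel is one of the earliest and most widely used container network interface (CNI) plugins in the Kubernetes ecosystem, originally developed by the CoreOS team. Its core goal is to give every Pod in a cluster a single, unified Layer 3 (IP) virtual network, so that Pods on different nodes can reach each other directly by IP address. Flannel supports several modes; the two most commonly used are VXLAN and host-gw.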

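1.1. VXLAN mode

How it works:

- VXLAN mode builds a virtual, flat Layer 2 overlay network. The underlying physical network may be connected at either Layer 2 or Layer 3.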

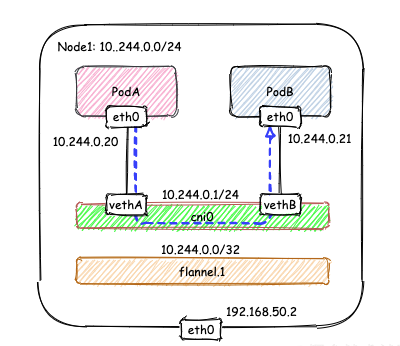

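- VXLAN is Flannel's default and recommended mode. With the default configuration, Flannel assigns each physical node its own /24 subnet, and the Pods on that node take their addresses from it. No two nodes share a subnet (for example, node 1 gets 10.244.0.0/24 and node 2 gets 10.244.1.0/24). Two virtual network interfaces are created on every node: cni0 and flannel.1.

- cni0 is a bridge device, similar to docker0. Every Pod on the node is attached to cni0 through a veth pair.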

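# Inside the container, look up the interface index of the host-side peer of eth0

cat /sys/class/net/eth0/iflink # suppose this prints 61

# Then, on the host, run

ip link show # the veth interface with index 61 is the container's peer

- flannel.1 is a VXLAN device that acts as the VTEP and encapsulates and decapsulates VXLAN packets. The ".1" suffix means packets are encapsulated with VNI 1, so in Flannel's VXLAN mode all Pods share a single Layer 2 network.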
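The per-node /24 allocation described above can be illustrated with a small sketch. It assumes subnets are handed out sequentially from the cluster-wide pool, which is a simplification for illustration only; the function name and node names are hypothetical and this is not Flannel's actual allocator.

```python
import ipaddress


def allocate_node_subnets(cluster_cidr, node_names, prefix_len=24):
    """Give each node the next free /24 from the cluster-wide Pod CIDR.

    Sequential allocation is an assumption made for this sketch.
    """
    subnets = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=prefix_len)
    return {name: str(next(subnets)) for name in node_names}


if __name__ == "__main__":
    for node, subnet in allocate_node_subnets("10.244.0.0/16", ["node1", "node2"]).items():
        print(node, subnet)
```

With a /16 pool and /24 node subnets there is room for 256 nodes, each with up to 254 usable Pod addresses.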

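Intra-node communication:

Containers on the same node communicate through the cni0 bridge alone; no VXLAN encapsulation or decapsulation is involved. In the figure, Node1's subnet is 10.244.0.0/24, and PodA (10.244.0.20) and PodB (10.244.0.21) reach each other through the cni0 bridge.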

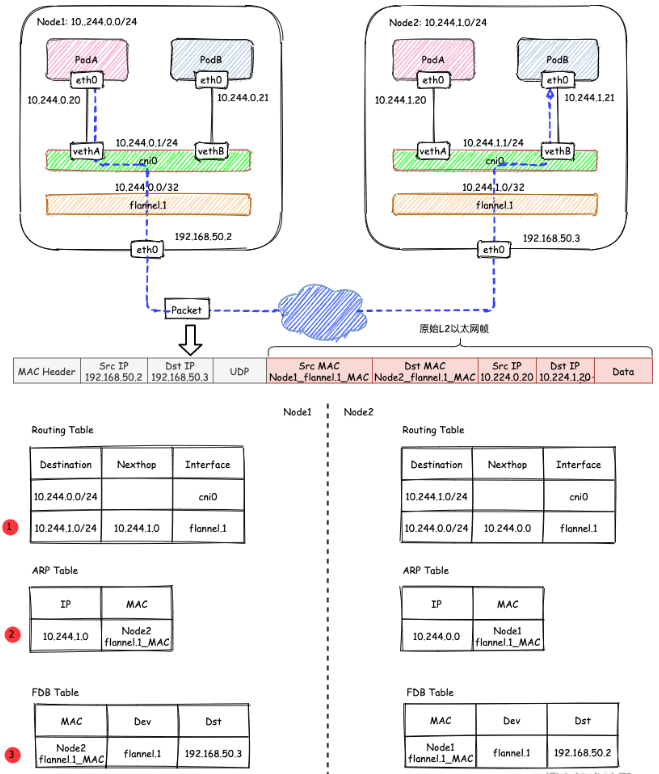

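Cross-node communication:

- Sender: PodA runs ping 10.244.1.21. The ICMP packet passes through the cni0 bridge and is handed to the flannel.1 device. flannel.1 is a VXLAN device responsible for VXLAN encapsulation and decapsulation, so on the sending side it wraps the original Layer 2 frame in a VXLAN UDP packet, which then leaves through the physical NIC eth0.

- Receiver: Node2 receives the UDP packet, recognizes it as a VXLAN packet, and hands it to flannel.1 for decapsulation. Based on the destination IP of the recovered inner packet, the packet is forwarded through the cni0 bridge to PodB.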
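To make the encapsulation step more concrete, here is a minimal sketch of the 8-byte VXLAN header that is placed in front of the original Layer 2 frame, following the layout in RFC 7348. The outer UDP, IP, and Ethernet headers are added by the kernel and are not modelled here; the function names are hypothetical.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid


def build_vxlan_header(vni):
    """Return the 8-byte VXLAN header for the given 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: 8 flag bits followed by 24 reserved bits.
    # Word 2: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header):
    """Extract the VNI from the first 8 bytes of a VXLAN payload."""
    _, second_word = struct.unpack("!II", header[:8])
    return second_word >> 8


def encapsulate(inner_frame, vni=1):
    """Prefix the original Layer 2 frame with a VXLAN header (VNI 1 for flannel.1)."""
    return build_vxlan_header(vni) + inner_frame


if __name__ == "__main__":
    print(build_vxlan_header(1).hex())
```

The receiving side does the reverse: it reads the VNI, strips the 8 bytes, and processes the inner frame as if it had arrived on a local Layer 2 segment.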

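flanneld reads the subnet assignments of all nodes from etcd and programs each node's routes accordingly: traffic for subnets that belong to other nodes is routed to flannel.1, and traffic for the local subnet is routed to the cni0 bridge. When node information changes, flanneld updates the routes to match. (When flanneld runs with --kube-subnet-mgr, as in the manifest in section 1.3, it obtains this information through the Kubernetes API rather than talking to etcd directly.)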

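Summary of Flannel VXLAN mode:

Flannel's VXLAN mode revolves around three tables. All three are maintained by the flanneld process, which fills them with the Pod network information it obtains from etcd.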

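- Routing table (route -n):

  - Traffic for the local subnet goes to cni0.

  - Traffic for every other subnet goes to flannel.1.

- ARP table (ip n):

  - Gives the MAC address of the flannel.1 device that serves the destination subnet.

- FDB table (bridge fdb show):

  - Maps that flannel.1 MAC address to the IP address of the physical host it lives on.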
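The way the three tables chain together can be sketched as a lookup pipeline. All table contents below (addresses, MAC addresses, node IPs) are made-up illustrative values for a hypothetical Node1, not output captured from a real cluster.

```python
import ipaddress

# Hypothetical tables on Node1; every value here is invented for illustration.
ROUTES = [
    # (destination subnet, gateway, device)
    ("10.244.0.0/24", None, "cni0"),               # local Pods, directly attached
    ("10.244.1.0/24", "10.244.1.0", "flannel.1"),  # Pods on Node2
]
ARP = {"10.244.1.0": "aa:bb:cc:00:00:02"}    # gateway IP -> remote flannel.1 MAC
FDB = {"aa:bb:cc:00:00:02": "192.168.1.12"}  # remote flannel.1 MAC -> node IP


def resolve(dst_ip):
    """Follow routing table -> ARP table -> FDB for one destination Pod IP."""
    dst = ipaddress.ip_address(dst_ip)
    for cidr, gateway, dev in ROUTES:
        if dst in ipaddress.ip_network(cidr):
            if gateway is None:
                # Local subnet: delivered over the bridge, no encapsulation.
                return {"dev": dev, "outer_dst": None}
            mac = ARP[gateway]
            return {"dev": dev, "inner_dst_mac": mac, "outer_dst": FDB[mac]}
    raise LookupError("no route to " + dst_ip)


if __name__ == "__main__":
    print(resolve("10.244.0.21"))
    print(resolve("10.244.1.21"))
```

The routing table picks the device, the ARP entry supplies the destination MAC of the inner frame, and the FDB entry supplies the destination IP of the outer UDP packet.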

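Pros and cons of VXLAN mode:

- Pros: works over either a Layer 2 or a Layer 3 physical network, so it is broadly applicable.

- Cons: every cross-node packet has to be encapsulated and decapsulated, so forwarding efficiency is comparatively low in large clusters.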

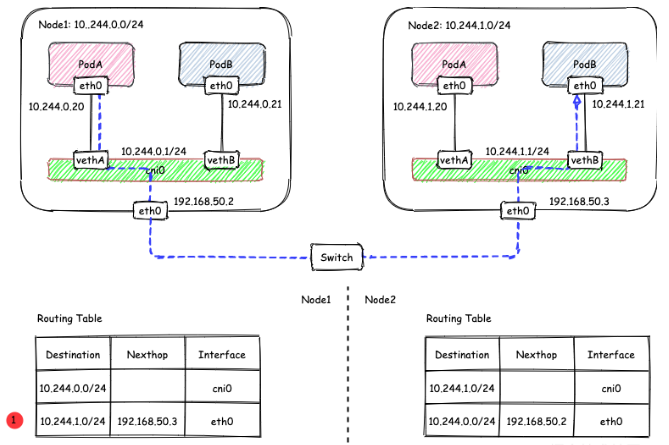

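1.2. host-gw mode

host-gw mode forwards traffic purely by means of routing tables. The idea fits in one sentence: turn every physical node into a router.

How it works:

- The flanneld daemon installs routes on every physical node so that Pods can communicate across hosts. Each node acts as the "gateway" for container traffic, which is exactly what "host-gw" means.

- Flannel's subnet and host information is stored in etcd. flanneld only needs to watch that data for changes and update the routing table in real time.

- host-gw mode requires all cluster hosts to be on the same LAN.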
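As a rough illustration of what flanneld programs on each node in host-gw mode, the sketch below derives one route per remote node from a table of node IPs and Pod subnets. The node names, addresses, and the helper function are hypothetical; the real daemon installs routes through the kernel rather than producing text.

```python
def host_gw_routes(local_node, nodes, dev="eth0"):
    """Build the routes a node needs: one per remote Pod subnet, via that node's IP.

    nodes maps node name -> (node IP, Pod subnet).
    """
    routes = []
    for name, (node_ip, pod_subnet) in sorted(nodes.items()):
        if name == local_node:
            continue  # the local subnet is reached through cni0, not via a gateway
        routes.append(f"{pod_subnet} via {node_ip} dev {dev}")
    return routes


if __name__ == "__main__":
    cluster = {
        "node1": ("192.168.1.11", "10.244.0.0/24"),
        "node2": ("192.168.1.12", "10.244.1.0/24"),
        "node3": ("192.168.1.13", "10.244.2.0/24"),
    }
    for route in host_gw_routes("node1", cluster):
        print(route)
```

Because the next hop must be directly reachable on the local link, this only works when the hosts are Layer 2 adjacent, which is the restriction stated in the last bullet.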

To use host-gw mode, edit the ConfigMap kube-flannel-cfg, change Backend.Type from vxlan to host-gw, and then restart all kube-flannel Pods.
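The change itself is a single field in net-conf.json. The following sketch shows the edit on the JSON text only; how the ConfigMap is fetched and written back is left out, and the helper name is hypothetical.

```python
import json


def set_backend_type(net_conf_json, backend_type):
    """Return net-conf.json with Backend.Type replaced, leaving other keys untouched."""
    conf = json.loads(net_conf_json)
    conf.setdefault("Backend", {})["Type"] = backend_type
    return json.dumps(conf, indent=2)


if __name__ == "__main__":
    original = '{"Network": "10.244.0.0/16", "Backend": {"Type": "vxlan"}}'
    print(set_backend_type(original, "host-gw"))
```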

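Communication flow in host-gw mode:

Pros and cons of host-gw mode:

- Pros: the forwarding path is shorter, so forwarding is more efficient in large clusters.

- Cons:

  - The physical network must be a Layer 2 network.

  - Some cloud platforms restrict adding route entries on cloud hosts, in which case this mode cannot be used.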

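1.3. Deployment example

Kubernetes control plane configuration:

Pod address allocation must be enabled on the Kubernetes control plane by passing --cluster-cidr=10.244.0.0/16 as a startup argument to kube-controller-manager and kube-proxy. This Pod IP CIDR pool should match the one in Flannel's configuration.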
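A quick consistency check between the control plane flag and Flannel's net-conf.json could look like the sketch below. It only compares strings you pass in; how the arguments and the ConfigMap content are collected is not shown, and both helper names are hypothetical.

```python
import ipaddress
import json


def cluster_cidr_from_args(args):
    """Return the value of --cluster-cidr from a list of startup arguments, if present."""
    for arg in args:
        if arg.startswith("--cluster-cidr="):
            return arg.split("=", 1)[1]
    return None


def cidr_matches_flannel(args, net_conf_json):
    """True when --cluster-cidr and Flannel's Network describe the same range."""
    cidr = cluster_cidr_from_args(args)
    if cidr is None:
        return False
    network = json.loads(net_conf_json)["Network"]
    return ipaddress.ip_network(cidr) == ipaddress.ip_network(network)


if __name__ == "__main__":
    args = ["kube-controller-manager", "--cluster-cidr=10.244.0.0/16"]
    net_conf = '{"Network": "10.244.0.0/16", "Backend": {"Type": "vxlan"}}'
    print(cidr_matches_flannel(args, net_conf))
```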

Installing Flannel:

In a Kubernetes cluster, Flannel runs as a DaemonSet. A reference manifest (kube-flannel.yaml) is shown below.

# kube-flannel.yaml
---
apiVersion: v1
kind: Namespace
metadata:
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
  name: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - clustercidrs
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---

# Configure the Pod IP CIDR pool and the Flannel backend mode in net-conf.json
apiVersion: v1
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
kind: ConfigMap
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-cfg
  namespace: kube-flannel
---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-ds
  namespace: kube-flannel
spec:
  selector:
    matchLabels:
      app: flannel
      k8s-app: flannel
  template:
    metadata:
      labels:
        app: flannel
        k8s-app: flannel
        tier: node
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      containers:
      - args:
        - --ip-masq
        - --kube-subnet-mgr
        command:
        - /opt/bin/flanneld
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        image: docker.io/flannel/flannel:v0.23.0
        imagePullPolicy: IfNotPresent
        name: kube-flannel
        resources:
          requests:
            cpu: 100m
            memory: 50Mi
        securityContext:
          capabilities:
            add:
            - NET_ADMIN
            - NET_RAW
          privileged: false
        volumeMounts:
        - mountPath: /run/flannel
          name: run
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
        - mountPath: /run/xtables.lock
          name: xtables-lock
      hostNetwork: true
      initContainers:
      - args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        command:
        - cp
        image: docker.io/flannel/flannel-cni-plugin:v1.2.0
        imagePullPolicy: IfNotPresent
        name: install-cni-plugin
        volumeMounts:
        - mountPath: /opt/cni/bin
          name: cni-plugin
      - args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        command:
        - cp
        image: docker.io/flannel/flannel:v0.23.0
        imagePullPolicy: IfNotPresent
        name: install-cni
        volumeMounts:
        - mountPath: /etc/cni/net.d
          name: cni
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
      priorityClassName: system-node-critical
      serviceAccountName: flannel
      tolerations:
      - effect: NoSchedule
        operator: Exists
      volumes:
      - hostPath:
          path: /run/flannel
        name: run
      - hostPath:
          path: /opt/cni/bin
        name: cni-plugin
      - hostPath:
          path: /etc/cni/net.d
        name: cni
      - configMap:
          name: kube-flannel-cfg
        name: flannel-cfg
      - hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
        name: xtables-lock

2. Calico

2.1. BGP mode

2.1.1. Overview

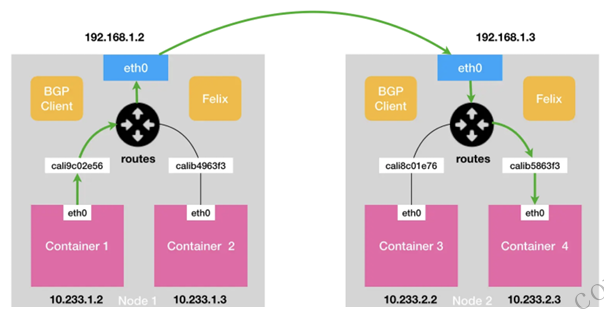

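Calico's BGP mode is almost identical to Flannel's host-gw mode. It likewise adds routes of the following form on each host:

<destination container subnet> via <gateway IP address> dev eth0

# The gateway IP address is the IP address of the host that runs the destination container.

Unlike Flannel, which maintains routing information through etcd and flanneld, Calico uses BGP to distribute routing information automatically across the whole cluster.

On every node, Calico uses the Linux kernel to implement an efficient vRouter that forwards data. Each vRouter advertises the routes of the containers running on its node to the entire Calico network over BGP, and automatically installs the forwarding routes needed to reach the other nodes. Calico thereby ensures that all container-to-container traffic is carried by plain IP routing.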

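2.1.2. The three main components of BGP mode

- The Calico CNI plugin: the part that integrates Calico with Kubernetes.

- Felix: a daemon (deployed as a DaemonSet in Kubernetes) that runs as an agent on every host. It inserts routes on the host (that is, writes them into the Linux kernel's FIB, the forwarding information base) and maintains the network devices Calico needs.

- BIRD: the BGP client. It reads the routes Felix has written into the kernel and distributes them to the other nodes in the cluster.

Apart from how routing information is maintained, Calico differs from Flannel's host-gw mode in one more way: it does not create any bridge device on the host. Calico works as follows:

- The Calico CNI plugin sets up a veth pair for every container and places one end on the host.

- Because Calico does not use a CNI bridge, the CNI plugin must also add one route on the host for each container's veth device so that incoming IP packets can be delivered. For example, the route for Container 4 on host Node 2 looks like this:

  10.233.2.3 dev cali5863f3 scope link

- IP packets sent by a container appear on the host through the veth pair. The host then forwards them to the correct gateway according to the next-hop IP address in its routes.

- These crucial "next hop" routes are maintained by Calico's Felix process. The routing information itself arrives over BGP through the BGP client, that is, the BIRD component.

In effect, Calico treats every node in the cluster as a border router. Together the nodes form a fully connected network and exchange routes with one another over BGP. These nodes are called BGP peers.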

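2.1.3. BGP Route Reflector

By default, Calico runs its network in what is called "node-to-node mesh" mode: the BGP client on every host must talk to the BGP clients on all other nodes to exchange routing information. As the number of nodes N grows, the number of connections grows on the order of N², which puts heavy pressure on the cluster network. Larger clusters therefore use Route Reflector mode.

In this mode, Calico designates one or a few dedicated nodes that establish BGP sessions with all nodes and thereby learn the global set of routes. Every other node only needs to exchange routes with these few nodes to obtain the routing information for the whole cluster.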
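The difference in scale between the two topologies can be checked with a little arithmetic. The sketch below counts BGP sessions under the assumption that the reflectors also peer with each other in a full mesh; that detail is an assumption of this example, not something stated above.

```python
def full_mesh_sessions(nodes):
    """Every node peers with every other node: N * (N - 1) / 2 sessions."""
    return nodes * (nodes - 1) // 2


def route_reflector_sessions(nodes, reflectors):
    """Each ordinary node peers with every reflector; reflectors peer with each other."""
    clients = nodes - reflectors
    return clients * reflectors + full_mesh_sessions(reflectors)


if __name__ == "__main__":
    for n in (10, 100, 500):
        print(n, full_mesh_sessions(n), route_reflector_sessions(n, 2))
```

For 100 nodes the full mesh needs 4950 sessions, while two reflectors bring this down to 197, and the count then grows linearly with the number of nodes.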

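2.2. IPIP tunnel mode

Like Flannel's host-gw mode, Calico's BGP mode requires Layer 2 reachability between hosts. In real deployments, however, cluster nodes are often spread across different network segments, so they are only connected at Layer 3. In that case IPIP mode must be enabled for Calico.

In IPIP mode, Calico uses the tunl0 device, an IP tunnel device that takes over the IP packets. tunl0 encapsulates each of them inside an IP packet addressed between hosts, so the new packet can be forwarded by the routers between the hosts.

As with VXLAN, this is essentially protocol encapsulation, and the underlying physical network can be either Layer 2 or Layer 3. It is slightly more efficient than VXLAN, but it is also more costly to maintain, so a general understanding is sufficient here.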
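One measurable part of the efficiency difference is header overhead. The sketch below assumes an IPv4 underlay: IPIP adds one outer IPv4 header (20 bytes), while VXLAN adds outer Ethernet, IPv4, UDP, and VXLAN headers (14 + 20 + 8 + 8 = 50 bytes). The helper name is hypothetical.

```python
# Per-packet overhead in bytes on an IPv4 underlay.
ENCAP_OVERHEAD_BYTES = {
    "none": 0,    # plain routing (BGP mode or host-gw)
    "ipip": 20,   # outer IPv4 header
    "vxlan": 50,  # outer Ethernet (14) + IPv4 (20) + UDP (8) + VXLAN (8)
}


def pod_mtu(underlay_mtu, encapsulation):
    """Largest Pod-side MTU that still fits in one underlay packet."""
    return underlay_mtu - ENCAP_OVERHEAD_BYTES[encapsulation]


if __name__ == "__main__":
    for mode in ("none", "ipip", "vxlan"):
        print(mode, pod_mtu(1500, mode))
```

On a 1500-byte underlay this leaves 1480 bytes per packet for IPIP and 1450 for VXLAN, which is why the tunnel MTU settings appear in the manifest in section 2.3.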

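2.3. Deployment example

Deploying Calico in Kubernetes mainly involves calico-node and the Calico policy controller. The following resources and configuration need to be created:

- ConfigMap calico-config: holds the configuration parameters Calico needs.

- The calico/node container, deployed as a DaemonSet so that it runs on every node.

- The Calico CNI binaries and network configuration, installed on every node (done by the install-cni container).

- A Deployment named "calico/kube-policy-controller" (calico-kube-controllers in the manifest below), which hooks into the NetworkPolicy objects defined for Pods in the Kubernetes cluster.

Download the YAML manifest from the official repository:

curl https://raw.githubusercontent.com/projectcalico/calico/v3.30.3/manifests/calico.yaml -O

The main configuration is described below:

- ConfigMap configuration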

# Source: calico/templates/calico-config.yaml
# This ConfigMap is used to configure a self-hosted Calico installation.
kind: ConfigMap
apiVersion: v1
metadata:
  name: calico-config
  namespace: kube-system
data:
  # Typha is disabled.
  # This explicitly signals that the deployment does not include the Typha service, so Calico's components bypass Typha and talk directly to etcd (or to the Kubernetes API server when Kubernetes is the datastore).
  # Typha is a lightweight proxy in the Calico project. Its main purpose is to reduce the number of connections to the backend datastore.
  typha_service_name: "none"
  # Configure the backend to use.
  calico_backend: "bird"
  # Configure the MTU to use for workload interfaces and tunnels.
  # By default, MTU is auto-detected, and explicitly setting this field should not be required.
  # You can override auto-detection by providing a non-zero value.
  veth_mtu: "0"
  # The CNI network configuration to install on each node. The special
  # values in this config will be automatically populated.
  cni_network_config: |-
    {
      "name": "k8s-pod-network",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "calico",
          "log_level": "info",
          "log_file_path": "/var/log/calico/cni/cni.log",
          "datastore_type": "kubernetes",
          "nodename": "__KUBERNETES_NODE_NAME__",
          "mtu": __CNI_MTU__,
          "ipam": {
            "type": "calico-ipam"
          },
          "policy": {
            "type": "k8s"
          },
          "kubernetes": {
            "kubeconfig": "__KUBECONFIG_FILEPATH__"
          }
        },
        {
          "type": "portmap",
          "snat": true,
          "capabilities": {"portMappings": true}
        }
      ]
    }

- calico-node

# Source: calico/templates/calico-node.yaml
# This manifest installs the calico-node container, as well
# as the CNI plugins and network config on
# each master and worker node in a Kubernetes cluster.
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: calico-node
  namespace: kube-system
  labels:
    k8s-app: calico-node
spec:
  selector:
    matchLabels:
      k8s-app: calico-node
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        k8s-app: calico-node
    spec:
      nodeSelector:
        kubernetes.io/os: linux
      hostNetwork: true
      tolerations:
        # Make sure calico-node gets scheduled on all nodes.
        - effect: NoSchedule
          operator: Exists
        # Mark the pod as a critical add-on for rescheduling.
        - key: CriticalAddonsOnly
          operator: Exists
        - effect: NoExecute
          operator: Exists
      serviceAccountName: calico-node
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      # Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force
      # deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.
      terminationGracePeriodSeconds: 0
      priorityClassName: system-node-critical
      initContainers:
        # This container performs upgrade from host-local IPAM to calico-ipam.
        # It can be deleted if this is a fresh installation, or if you have already
        # upgraded to use calico-ipam.
        - name: upgrade-ipam
          image: docker.io/calico/cni:v3.30.3
          imagePullPolicy: IfNotPresent
          command: ["/opt/cni/bin/calico-ipam", "-upgrade"]
          envFrom:
            - configMapRef:
                # Allow KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT to be overridden for eBPF mode.
                name: kubernetes-services-endpoint
                optional: true
          env:
            - name: KUBERNETES_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
          volumeMounts:
            - mountPath: /var/lib/cni/networks
              name: host-local-net-dir
            - mountPath: /host/opt/cni/bin
              name: cni-bin-dir
          securityContext:
            privileged: true
        # This container installs the CNI binaries
        # and CNI network config file on each node.
        - name: install-cni
          image: docker.io/calico/cni:v3.30.3
          imagePullPolicy: IfNotPresent
          command: ["/opt/cni/bin/install"]
          envFrom:
            - configMapRef:
                # Allow KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT to be overridden for eBPF mode.
                name: kubernetes-services-endpoint
                optional: true
          env:
            # Name of the CNI config file to create.
            - name: CNI_CONF_NAME
              value: "10-calico.conflist"
            # The CNI network config to install on each node.
            - name: CNI_NETWORK_CONFIG
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: cni_network_config
            # Set the hostname based on the k8s node name.
            - name: KUBERNETES_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            # CNI MTU Config variable
            - name: CNI_MTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # Prevents the container from sleeping forever.
            - name: SLEEP
              value: "false"
          volumeMounts:
            - mountPath: /host/opt/cni/bin
              name: cni-bin-dir
            - mountPath: /host/etc/cni/net.d
              name: cni-net-dir
          securityContext:
            privileged: true
        # This init container mounts the necessary filesystems needed by the BPF data plane
        # i.e. bpf at /sys/fs/bpf and cgroup2 at /run/calico/cgroup. Calico-node initialisation is executed
        # in best effort fashion, i.e. no failure for errors, to not disrupt pod creation in iptable mode.
        - name: "mount-bpffs"
          image: docker.io/calico/node:v3.30.3
          imagePullPolicy: IfNotPresent
          command: ["calico-node", "-init", "-best-effort"]
          volumeMounts:
            - mountPath: /sys/fs
              name: sys-fs
              # Bidirectional is required to ensure that the new mount we make at /sys/fs/bpf propagates to the host
              # so that it outlives the init container.
              mountPropagation: Bidirectional
            - mountPath: /var/run/calico
              name: var-run-calico
              # Bidirectional is required to ensure that the new mount we make at /run/calico/cgroup propagates to the host
              # so that it outlives the init container.
              mountPropagation: Bidirectional
            # Mount /proc/ from host which usually is an init program at /nodeproc. It's needed by mountns binary,
            # executed by calico-node, to mount root cgroup2 fs at /run/calico/cgroup to attach CTLB programs correctly.
            - mountPath: /nodeproc
              name: nodeproc
              readOnly: true
          securityContext:
            privileged: true
      containers:
        # Runs calico-node container on each Kubernetes node. This
        # container programs network policy and routes on each
        # host.
        - name: calico-node
          image: docker.io/calico/node:v3.30.3
          imagePullPolicy: IfNotPresent
          envFrom:
            - configMapRef:
                # Allow KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT to be overridden for eBPF mode.
                name: kubernetes-services-endpoint
                optional: true
          env:
            # Use Kubernetes API as the backing datastore.
            - name: DATASTORE_TYPE
              value: "kubernetes"
            # Wait for the datastore.
            - name: WAIT_FOR_DATASTORE
              value: "true"
            # Set based on the k8s node name.
            - name: NODENAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            # Choose the backend to use.
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
            # Cluster type to identify the deployment type
            - name: CLUSTER_TYPE
              value: "k8s,bgp"
            # Auto-detect the BGP IP address.
            - name: IP
              value: "autodetect"
            # Changed to disable IPIP
            - name: CALICO_IPV4POOL_IPIP
              value: "Never"
            # Enable or Disable VXLAN on the default IP pool.
            - name: CALICO_IPV4POOL_VXLAN
              value: "Never"
            # Enable or Disable VXLAN on the default IPv6 IP pool.
            - name: CALICO_IPV6POOL_VXLAN
              value: "Never"
            # Set MTU for tunnel device used if ipip is enabled
            - name: FELIX_IPINIPMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # Set MTU for the VXLAN tunnel device.
            - name: FELIX_VXLANMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # Set MTU for the Wireguard tunnel device.
            - name: FELIX_WIREGUARDMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # The default IPv4 pool to create on startup if none exists. Pod IPs will be
            # chosen from this range. Changing this value after installation will have
            # no effect. This should fall within `--cluster-cidr`.
            - name: CALICO_IPV4POOL_CIDR
              value: "10.244.0.0/16"
            # Disable file logging so `kubectl logs` works.
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
            # Set Felix endpoint to host default action to ACCEPT.
            - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
              value: "ACCEPT"
            # Disable IPv6 on Kubernetes.
            - name: FELIX_IPV6SUPPORT
              value: "false"
            - name: FELIX_HEALTHENABLED
              value: "true"
          securityContext:
            privileged: true
          resources:
            requests:
              cpu: 250m
          lifecycle:
            preStop:
              exec:
                command:
                  - /bin/calico-node
                  - -shutdown
          livenessProbe:
            exec:
              command:
                - /bin/calico-node
                - -felix-live
                - -bird-live
            periodSeconds: 10
            initialDelaySeconds: 10
            failureThreshold: 6
            timeoutSeconds: 10
          readinessProbe:
            exec:
              command:
                - /bin/calico-node
                - -felix-ready
                - -bird-ready
            periodSeconds: 10
            timeoutSeconds: 10
          volumeMounts:
            # For maintaining CNI plugin API credentials.
            - mountPath: /host/etc/cni/net.d
              name: cni-net-dir
              readOnly: false
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - mountPath: /run/xtables.lock
              name: xtables-lock
              readOnly: false
            - mountPath: /var/run/calico
              name: var-run-calico
              readOnly: false
            - mountPath: /var/lib/calico
              name: var-lib-calico
              readOnly: false
            - name: policysync
              mountPath: /var/run/nodeagent
            # For eBPF mode, we need to be able to mount the BPF filesystem at /sys/fs/bpf so we mount in the
            # parent directory.
            - name: bpffs
              mountPath: /sys/fs/bpf
            - name: cni-log-dir
              mountPath: /var/log/calico/cni
              readOnly: true
      volumes:
        # Used by calico-node.
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: var-run-calico
          hostPath:
            path: /var/run/calico
            type: DirectoryOrCreate
        - name: var-lib-calico
          hostPath:
            path: /var/lib/calico
            type: DirectoryOrCreate
        - name: xtables-lock
          hostPath:
            path: /run/xtables.lock
            type: FileOrCreate
        - name: sys-fs
          hostPath:
            path: /sys/fs/
            type: DirectoryOrCreate
        - name: bpffs
          hostPath:
            path: /sys/fs/bpf
            type: Directory
        # mount /proc at /nodeproc to be used by mount-bpffs initContainer to mount root cgroup2 fs.
        - name: nodeproc
          hostPath:
            path: /proc
        # Used to install CNI.
        - name: cni-bin-dir
          hostPath:
            path: /opt/cni/bin
            type: DirectoryOrCreate
        - name: cni-net-dir
          hostPath:
            path: /etc/cni/net.d
        # Used to access CNI logs.
        - name: cni-log-dir
          hostPath:
            path: /var/log/calico/cni
        # Mount in the directory for host-local IPAM allocations. This is
        # used when upgrading from host-local to calico-ipam, and can be removed
        # if not using the upgrade-ipam init container.
        - name: host-local-net-dir
          hostPath:
            path: /var/lib/cni/networks
        # Used to create per-pod Unix Domain Sockets
        - name: policysync
          hostPath:
            type: DirectoryOrCreate
            path: /var/run/nodeagent

- calico-policy-controller

# Source: calico/templates/calico-kube-controllers.yaml
# See https://github.com/projectcalico/kube-controllers
apiVersion: apps/v1
kind: Deployment
metadata:
  name: calico-kube-controllers
  namespace: kube-system
  labels:
    k8s-app: calico-kube-controllers
spec:
  # The controllers can only have a single active instance.
  replicas: 1
  selector:
    matchLabels:
      k8s-app: calico-kube-controllers
  strategy:
    type: Recreate
  template:
    metadata:
      name: calico-kube-controllers
      namespace: kube-system
      labels:
        k8s-app: calico-kube-controllers
    spec:
      nodeSelector:
        kubernetes.io/os: linux
      tolerations:
        # Mark the pod as a critical add-on for rescheduling.
        - key: CriticalAddonsOnly
          operator: Exists
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
        - key: node-role.kubernetes.io/control-plane
          effect: NoSchedule
      serviceAccountName: calico-kube-controllers
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      priorityClassName: system-cluster-critical
      containers:
        - name: calico-kube-controllers
          image: docker.io/calico/kube-controllers:v3.30.3
          imagePullPolicy: IfNotPresent
          env:
            # Choose which controllers to run.
            - name: ENABLED_CONTROLLERS
              value: node,loadbalancer
            - name: DATASTORE_TYPE
              value: kubernetes
          livenessProbe:
            exec:
              command:
                - /usr/bin/check-status
                - -l
            periodSeconds: 10
            initialDelaySeconds: 10
            failureThreshold: 6
            timeoutSeconds: 10
          readinessProbe:
            exec:
              command:
                - /usr/bin/check-status
                - -r
            periodSeconds: 10
          securityContext:
            runAsNonRoot: true

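2.4. Deployment troubleshooting notes

The containerd runtime was configured with a proxy, but the proxy could not forward traffic to cluster IPs. Creating a Pod failed with the following events: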

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 86s default-scheduler Successfully assigned kube-system/calico-kube-controllers-5c7545f596-lrx25 to k8s-master-01

Warning FailedCreatePodSandBox 67s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "9842e0a5f676046bb23fd6dfd96118d228ed3fd0e6c807fa2c57784f71c5cef5": plugin type="calico" failed (add): error getting ClusterInformation: Get "https://10.96.0.1:443/apis/crd.projectcalico.org/v1/clusterinformations/default": net/http: TLS handshake timeout

Warning FailedCreatePodSandBox 46s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "77722de246e94cc8fef8ff4861f3c377549042702dccfeed5e1e12b03a895484": plugin type="calico" failed (add): error getting ClusterInformation: Get "https://10.96.0.1:443/apis/crd.projectcalico.org/v1/clusterinformations/default": net/http: TLS handshake timeout

Warning FailedCreatePodSandBox 25s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "1ba87a3bd73285cd51a9bcc3ea643ac4406477ed3c9a399d89330c4ee1d42370": plugin type="calico" failed (add): error getting ClusterInformation: Get "https://10.96.0.1:443/apis/crd.projectcalico.org/v1/clusterinformations/default": net/http: TLS handshake timeout

Warning FailedCreatePodSandBox 4s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "f21b8420c7ef18e0d1406bb71f88d6f6f3000cc72167d69a7d25420b177b48d1": plugin type="calico" failed (add): error getting ClusterInformation: Get "https://10.96.0.1:443/apis/crd.projectcalico.org/v1/clusterinformations/default": net/http: TLS handshake timeout

Normal SandboxChanged 3s (x4 over 66s) kubelet Pod sandbox changed, it will be killed and re-created.

Solution: remove the proxy, or exclude the cluster-internal addresses (Service and Pod IPs) from the proxy.

# /etc/systemd/system/containerd.service.d/proxy.conf

[Service]

Environment="HTTP_PROXY=http://hypervisor-01:20172"

Environment="HTTPS_PROXY=http://hypervisor-01:20172"

# Exclude internal network addresses

Environment="NO_PROXY=10.0.0.0/8,192.168.0.0/16,172.16.0.0/12,127.0.0.1,localhost,.svc,.cluster.local"
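To confirm that the failing address from the events above (10.96.0.1) is covered by the exclusion list, the CIDR entries can be checked with a short sketch. It only evaluates IP and CIDR entries and skips host names and domain suffixes; whether a given client honours CIDR notation in NO_PROXY depends on its implementation, so treat this as a sanity check rather than a model of containerd's behaviour. The function name is hypothetical.

```python
import ipaddress

NO_PROXY = "10.0.0.0/8,192.168.0.0/16,172.16.0.0/12,127.0.0.1,localhost,.svc,.cluster.local"


def ip_bypasses_proxy(ip, no_proxy):
    """Return True if ip falls inside any IP or CIDR entry of a NO_PROXY list."""
    addr = ipaddress.ip_address(ip)
    for entry in no_proxy.split(","):
        try:
            network = ipaddress.ip_network(entry.strip(), strict=False)
        except ValueError:
            continue  # host names and domain suffixes are not evaluated here
        if addr.version == network.version and addr in network:
            return True
    return False


if __name__ == "__main__":
    for ip in ("10.96.0.1", "10.244.1.21", "8.8.8.8"):
        print(ip, ip_bypasses_proxy(ip, NO_PROXY))
```

Both the Service IP 10.96.0.1 and the Pod range 10.244.0.0/16 fall inside 10.0.0.0/8, so requests to them would skip the proxy.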