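1. Create the Jenkins credentials

Jenkins needs several credentials for the whole pipeline. Two of them were created earlier: the one used to create agent (worker) pods in the designated k8s cluster, and the one used to pull code from GitLab. What remains is a credential for the image registry and one kubeconfig credential for each environment's k8s cluster that we deploy to.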

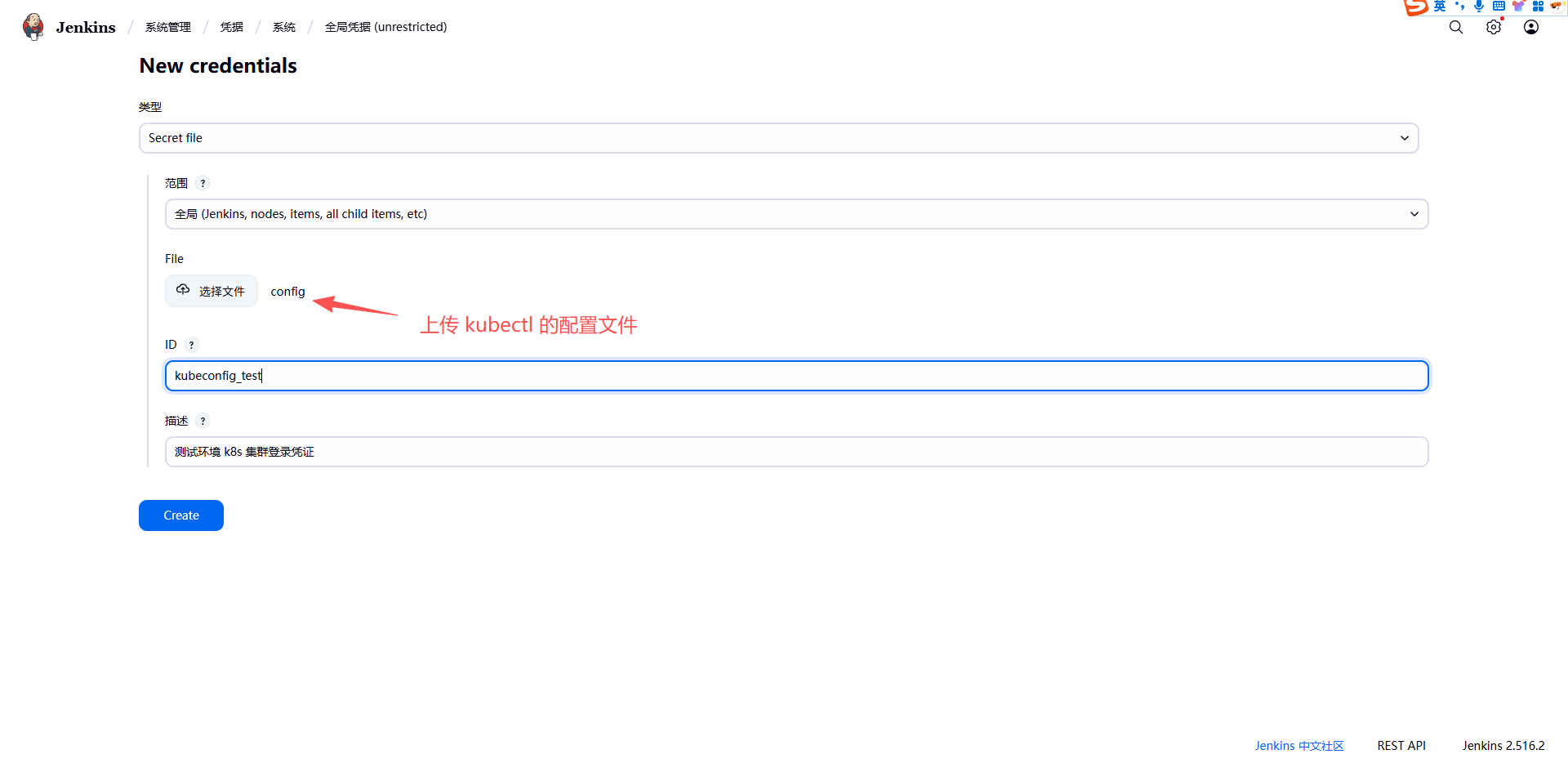

创建对接测试环境的凭证:

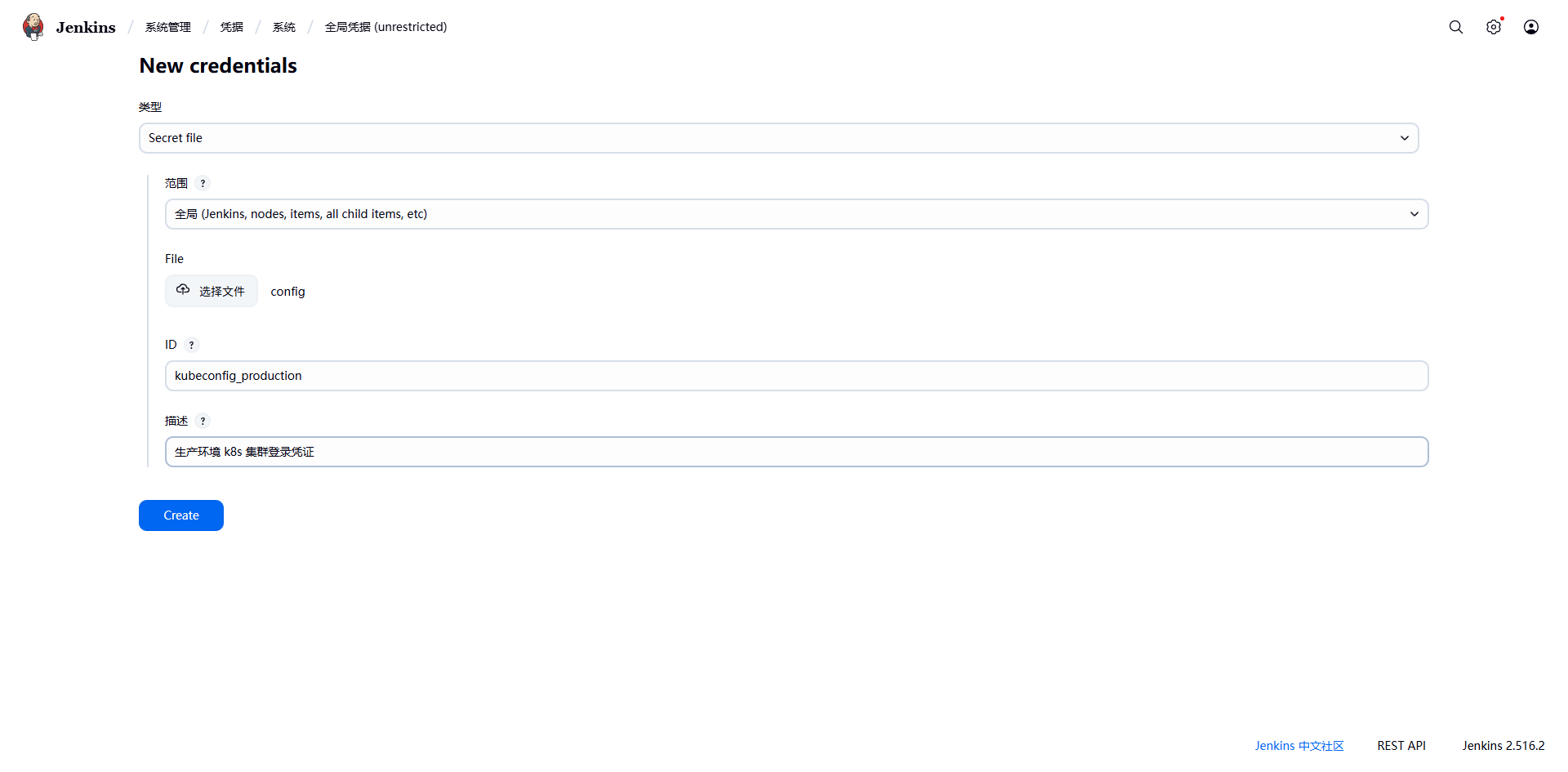

创建对接生产环境的凭证:

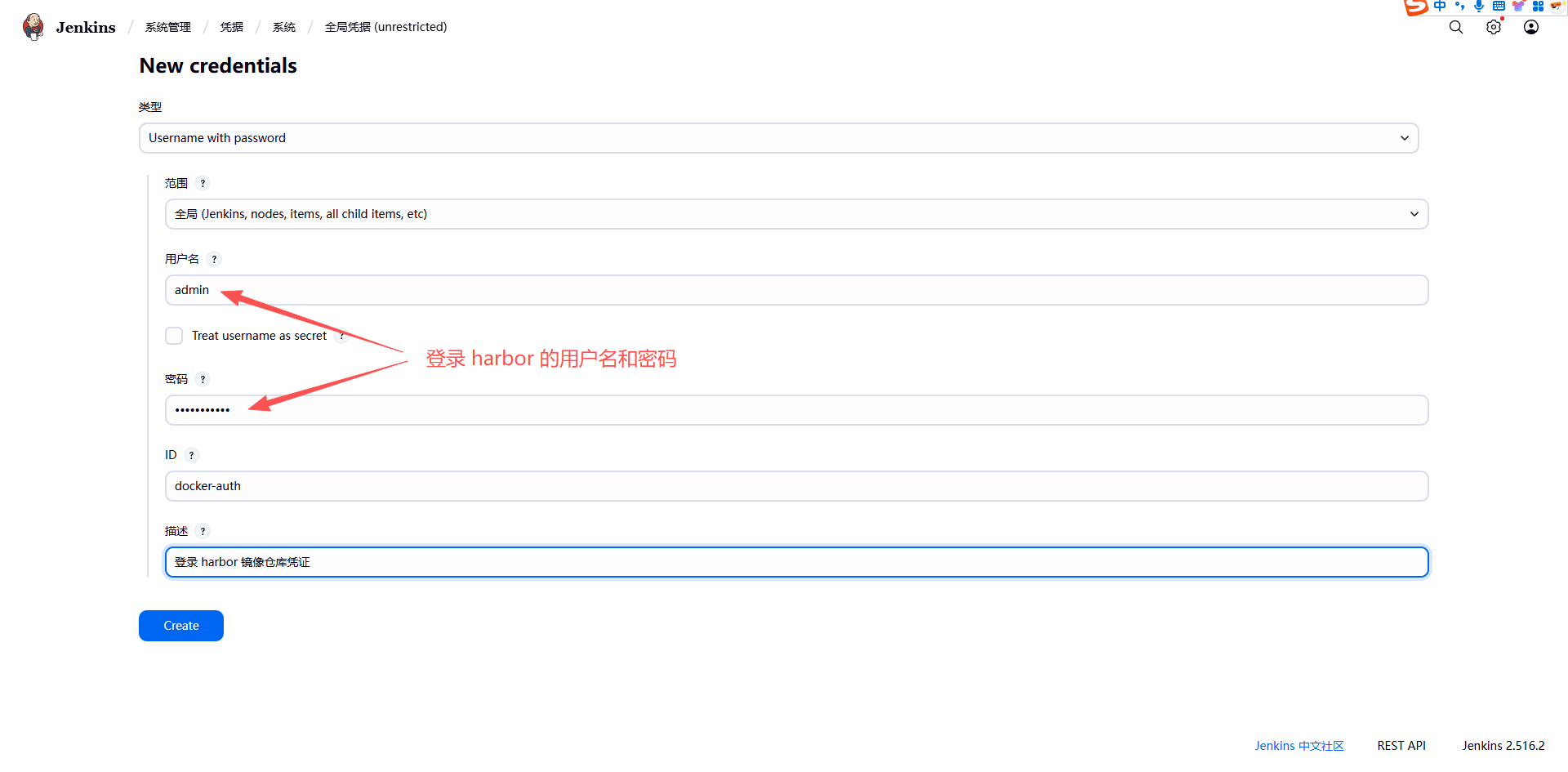

创建 Jenkins 对接镜像仓库的凭证:

这里因为只有一个 k8s 集群,所以上传的 config 都是同一个文件,在实际情况有多个集群时,就使用不同的 config。

注意:此处的镜像仓库凭证只用于 Jenkins slave pod 推送镜像,将构建好的镜像部署到其他集群时,相应的集群名称空间下应该单独创建一个 secret 来获取镜像仓库凭证。同样,用于 Jenkins slave pod 的凭证也可以在 k8s 中以 secret 形式创建。

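2. Create the image-pull Secret

In each cluster the image is deployed to (here, the test and production environments), also create a Secret that is used at deploy time to pull the image from the registry. This lab has only one cluster, so different namespaces in the same cluster stand in for the environments.

```bash
# Test environment
kubectl create namespace test
kubectl create secret docker-registry regcred \
  --docker-server=192.168.2.151:30002 \
  --docker-username=admin \
  --docker-password=Harbor12345 \
  -n test

# Production environment
kubectl create namespace production
kubectl create secret docker-registry regcred \
  --docker-server=192.168.2.151:30002 \
  --docker-username=admin \
  --docker-password=Harbor12345 \
  -n production
```

3. Write the example Go program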

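Create the `go.mod` file:

```text
// go.mod
module golang

go 1.21
```

Create the `run.go` file with the Go code:

```go
// run.go
package main

import (
	"fmt"
	"time"
)

func main() {
	fmt.Println("main branch....")
	// Sleep for 1,000,000 seconds (about 11.6 days) so the container keeps running after printing.
	time.Sleep(1000000 * time.Second)
}
```

4. Create the Jenkinsfile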

```groovy
// Label for the dynamically created agent (slave) pod
def label = "slave-${UUID.randomUUID().toString()}"

// Template for the dynamically created agent pod; it starts three containers: golang, kaniko and kubectl
podTemplate(
    label: label,
    containers: [
        containerTemplate(name: 'golang', image: 'golang:1.21', command: 'cat', ttyEnabled: true),
        containerTemplate(name: 'kaniko', image: 'gcr.io/kaniko-project/executor:debug', command: 'cat', ttyEnabled: true),
        containerTemplate(name: 'kubectl', image: 'cnych/kubectl', command: 'cat', ttyEnabled: true)
    ],
    serviceAccount: 'jenkins',
    namespace: 'cicd-tool' // explicitly set the namespace
) {
    node(label) {
        def myRepo = checkout scm
        // Commit SHA of the checked-out revision
        def gitCommit = myRepo.GIT_COMMIT
        // Branch being built (e.g. origin/main)
        def gitBranch = myRepo.GIT_BRANCH
        echo "------------> Branch for this build: ${gitBranch}"

        // Registry address
        def registryUrl = "192.168.2.151:30002"
        def imageEndpoint = "online/test"
        // Use the short git commit ID as the tag of the Docker image built below
        def imageTag = sh(script: "git rev-parse --short HEAD", returnStdout: true).trim()
        // Full image reference
        def image = "${registryUrl}/${imageEndpoint}:${imageTag}"

        stage('Unit tests') {
            echo "1. Test stage (skipped here; customize as needed)"
        }

        stage('Compile and package') {
            try {
                container('golang') {
                    echo "2. Compile and package stage"
                    // CGO_ENABLED=0 guarantees a static binary that runs on the alpine base image
                    sh """
                        export GOPROXY=https://goproxy.cn
                        CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build -buildvcs=false -v -o main
                    """
                    // Check that the Dockerfile exists
                    sh "ls -la Dockerfile || echo 'Dockerfile not found'"
                }
            } catch (exc) {
                println "Build failed - ${currentBuild.fullDisplayName}"
                throw(exc)
            }
        }

        stage('Build Docker image') {
            container('kaniko') {
                echo "3. Build the Docker image with Kaniko"
                withCredentials([usernamePassword(
                    credentialsId: 'docker-auth',
                    usernameVariable: 'USERNAME',
                    passwordVariable: 'PASSWORD'
                )]) {
                    // Write the registry auth file Kaniko reads. The Groovy string is single-quoted,
                    // so the shell (not Groovy) expands $USERNAME/$PASSWORD.
                    sh '''
                        mkdir -p /kaniko/.docker
                        AUTH=$(echo -n "${USERNAME}:${PASSWORD}" | base64 | tr -d '\\n')
                        printf '{"auths":{"192.168.2.151:30002":{"auth":"%s"}}}\\n' "$AUTH" > /kaniko/.docker/config.json
                    '''
                    // Confirm the file was written without printing it (it contains the base64 credentials)
                    sh 'test -s /kaniko/.docker/config.json && echo "config.json written"'

                    // Check the project file layout
                    sh "ls -la"

                    // The registry is plain HTTP: Kaniko handles that through --insecure and
                    // --skip-tls-verify (it does not read a Docker daemon.json insecure-registries list)
                    sh "/kaniko/executor --context=\$(pwd) --dockerfile=Dockerfile --destination=${image} --insecure --skip-tls-verify --verbosity=debug"
                }
            }
        }

        stage('Run kubectl') {
            container('kubectl') {
                // Note: if the server field of the kubeconfig uploaded to Jenkins uses a hostname
                // (e.g. server: https://api-server:8443), add a hosts entry for it in the kubectl container.
                sh "echo '192.168.2.150 api-server' >> /etc/hosts"

                // Normalize the branch name before comparing
                def normalizedBranch = gitBranch.replace('origin/', '')

                if (normalizedBranch == 'main') {
                    withCredentials([file(credentialsId: 'kubeconfig_production', variable: 'KUBECONFIG')]) {
                        echo "List pods in the production K8S cluster"
                        sh 'mkdir -p ~/.kube && /bin/cp "${KUBECONFIG}" ~/.kube/config'
                        sh "kubectl get pods -n production"

                        // Ask for confirmation before deploying to production
                        def userInput = input(
                            id: 'userInput',
                            message: 'Deploy to the production environment?',
                            parameters: [
                                choice(
                                    choices: "Y\nN",
                                    description: 'Choose whether to deploy to production',
                                    name: 'CONFIRM_DEPLOY'
                                )
                            ]
                        )

                        if (userInput == "Y") {
                            // Deploy to production
                            sh "kubectl -n production set image deployment/test-deployment test-container=${image}"
                            echo "Deployed to the production environment"
                        } else {
                            // End the job
                            echo "Deployment cancelled"
                            currentBuild.result = 'ABORTED'
                            error('User cancelled the deployment to production')
                        }
                    }
                } else if (normalizedBranch == 'develop') {
                    withCredentials([file(credentialsId: 'kubeconfig_test', variable: 'KUBECONFIG')]) {
                        echo "List pods in the test K8S cluster"
                        sh 'mkdir -p ~/.kube && /bin/cp "${KUBECONFIG}" ~/.kube/config'
                        sh "kubectl get pods -n test"
                        sh "kubectl -n test set image deployment/test-deployment test-container=${image}"
                        echo "Deployed to the test environment"
                    }
                } else {
                    echo "Branch ${gitBranch} is neither main nor develop; skipping deployment"
                }
            }
        }
    }
}
```
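The branch handling in the `Run kubectl` stage boils down to a small lookup: normalize the branch name, then send `main` to production (behind a manual confirmation) and `develop` to test. The Go sketch below restates that decision table so it could be unit-tested outside Jenkins. It is an illustration only: `deployTarget` and `resolveTarget` are hypothetical names, and the `feature/login` branch is an invented example.

```go
package main

import (
	"fmt"
	"strings"
)

// deployTarget describes where a branch build is deployed.
type deployTarget struct {
	Namespace    string // namespace passed to kubectl -n
	CredentialID string // Jenkins Secret file credential holding the kubeconfig
	NeedsConfirm bool   // production waits for a manual Y/N input step
}

// resolveTarget mirrors the branch logic of the "Run kubectl" stage:
// remove every "origin/" (as Groovy's String.replace does), then match.
// The second result is false when the branch is not deployed at all.
func resolveTarget(gitBranch string) (deployTarget, bool) {
	switch strings.ReplaceAll(gitBranch, "origin/", "") {
	case "main":
		return deployTarget{Namespace: "production", CredentialID: "kubeconfig_production", NeedsConfirm: true}, true
	case "develop":
		return deployTarget{Namespace: "test", CredentialID: "kubeconfig_test"}, true
	default:
		return deployTarget{}, false
	}
}

func main() {
	for _, b := range []string{"origin/main", "develop", "feature/login"} {
		if t, ok := resolveTarget(b); ok {
			fmt.Printf("%s -> namespace=%s credential=%s confirm=%v\n", b, t.Namespace, t.CredentialID, t.NeedsConfirm)
		} else {
			fmt.Printf("%s -> skip deployment\n", b)
		}
	}
}
```

Laid out as one table, it is easy to see that any branch other than `main` and `develop` still builds and pushes an image but is never deployed.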

5. Create the Dockerfile

```dockerfile
FROM alpine:latest
WORKDIR /app
COPY main .
RUN chmod +x main
EXPOSE 8080
USER nobody:nobody
CMD ["./main"]
```

6. Create the deployment configuration

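The deploy step in the `Run kubectl` stage of the Jenkinsfile above only runs `kubectl set image` against an existing Deployment, so the resources must be defined in the cluster beforehand; automatic deployment then updates the application simply by swapping the image. So first create the following two Deployments in the cluster.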

Production environment:

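```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment
  namespace: production
  labels:
    app: test
spec:
  replicas: 2
  selector:
    matchLabels:
      app: test
  template:
    metadata:
      labels:
        app: test
    spec:
      containers:
      - name: test-container
        image: busybox:latest # placeholder; the automated deployment replaces it
        # Keeps the container running. Note that command overrides the image's CMD,
        # so even after the image is replaced the container runs this command, not ./main.
        command: ["sh", "-c", "echo 'Waiting for image update...'; sleep 3600"]
        ports:
        - containerPort: 8080
      imagePullSecrets:
      - name: regcred
```

Test environment: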

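```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment
  namespace: test
  labels:
    app: test
spec:
  replicas: 2
  selector:
    matchLabels:
      app: test
  template:
    metadata:
      labels:
        app: test
    spec:
      containers:
      - name: test-container
        image: busybox:latest # placeholder; the automated deployment replaces it
        # Keeps the container running. Note that command overrides the image's CMD,
        # so even after the image is replaced the container runs this command, not ./main.
        command: ["sh", "-c", "echo 'Waiting for image update...'; sleep 3600"]
        ports:
        - containerPort: 8080
      imagePullSecrets:
      - name: regcred
```

7. Commit the code and test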

# 切换到项目目录

cd test/

ll

total 32

-rw-r--r-- 1 admin admin 108 Sep 15 16:29 Dockerfile

-rw-r--r-- 1 admin admin 11856 Sep 15 16:29 Jenkinsfile

-rw-r--r-- 1 admin admin 6163 Sep 14 17:08 README.md

-rw-r--r-- 1 admin admin 23 Sep 15 15:59 go.mod

-rw-r--r-- 1 admin admin 134 Sep 15 16:00 run.go

# 提交代码

git add .

git commit -m "main v1.0"

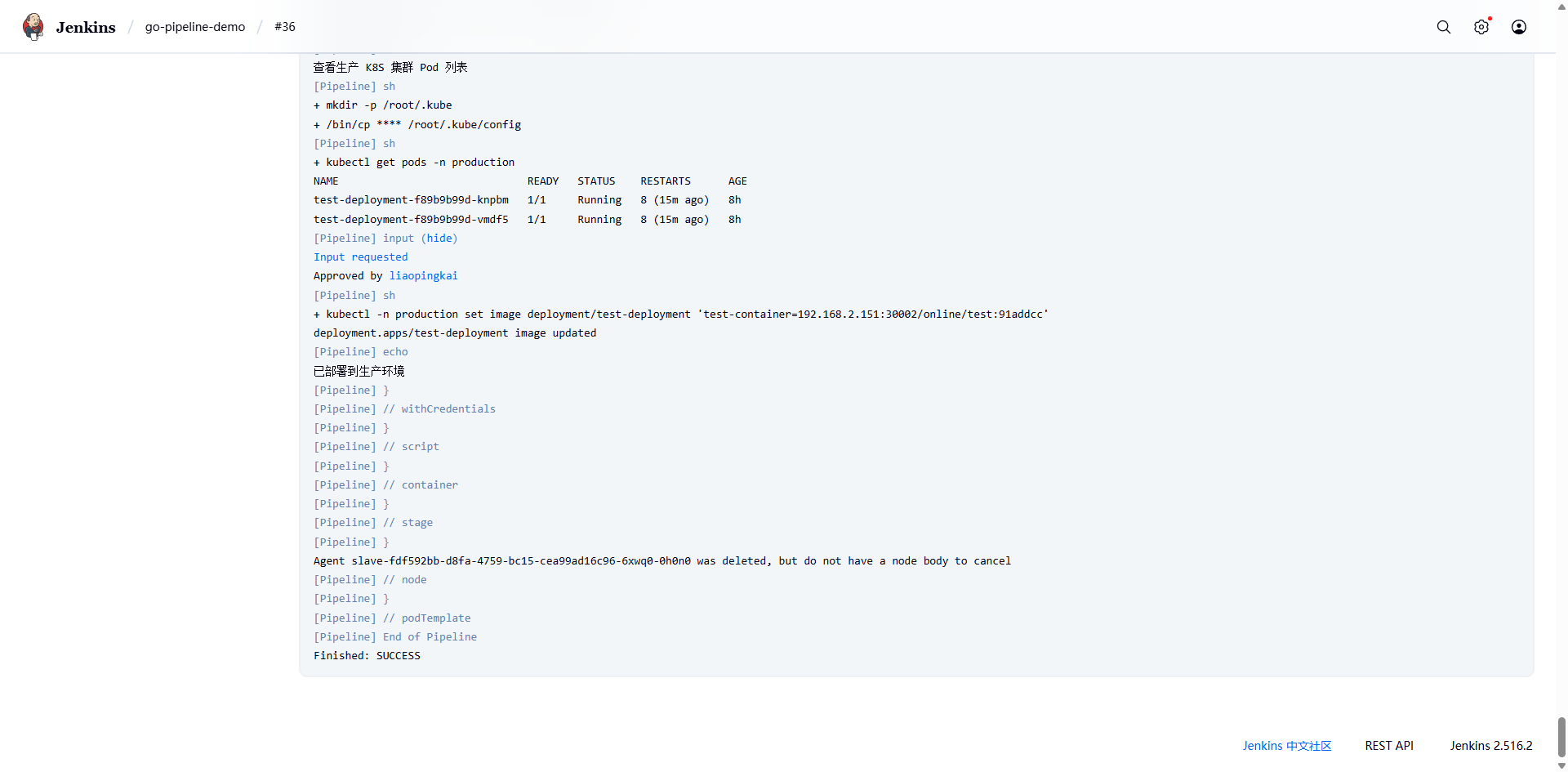

git push origin main可以看到构建成功:

查看 Pod:

```yaml
# kubectl get -n production pod test-deployment-79db945998-k8b8z -o yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    cni.projectcalico.org/containerID: 5e806a932304d1fef618baa922ebf784683da7152c5fcaa290feb977884838e7
    cni.projectcalico.org/podIP: 10.244.44.227/32
    cni.projectcalico.org/podIPs: 10.244.44.227/32
  creationTimestamp: "2025-09-15T16:56:38Z"
  generateName: test-deployment-79db945998-
  labels:
    app: test
    pod-template-hash: 79db945998
  name: test-deployment-79db945998-k8b8z
  namespace: production
  ownerReferences:
  - apiVersion: apps/v1
    blockOwnerDeletion: true
    controller: true
    kind: ReplicaSet
    name: test-deployment-79db945998
    uid: 7bb45d00-a6aa-4dad-a1f2-e79a32689da4
  resourceVersion: "4742852"
  uid: 0ba20b2b-0842-4c0c-8d00-c2d2dc3df0a8
spec:
  containers:
  - command:
    - sh
    - -c
    - echo 'Waiting for image update...'; sleep 3600
    image: 192.168.2.151:30002/online/test:91addcc # the image has been updated
    imagePullPolicy: IfNotPresent
    name: test-container
    ports:
    - containerPort: 8080
      protocol: TCP
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-v7hlv
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  imagePullSecrets:
  - name: regcred
  nodeName: k8s-node-02
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: kube-api-access-v7hlv
    projected:
      defaultMode: 420
      sources:
      - serviceAccountToken:
          expirationSeconds: 3607
          path: token
      - configMap:
          items:
          - key: ca.crt
            path: ca.crt
          name: kube-root-ca.crt
      - downwardAPI:
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
            path: namespace
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2025-09-15T16:56:40Z"
    status: "True"
    type: PodReadyToStartContainers
  - lastProbeTime: null
    lastTransitionTime: "2025-09-15T16:56:38Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2025-09-16T07:56:53Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2025-09-16T07:56:53Z"
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2025-09-15T16:56:38Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: containerd://0a86af44e4ed291d2d7719e7109ea936269b93fa42a3fe0e0dfa98feda77fe9d
    image: 192.168.2.151:30002/online/test:91addcc
    imageID: 192.168.2.151:30002/online/test@sha256:2601d693e89a69f23ff77b8dee7bbe0ecbcf68165d331ddb344bf6df804994d8
    lastState:
      terminated:
        containerID: containerd://dfc6d284925cf8b49c4382a538a1f3931e63e548251011d5dda900dfc7c7ebb4
        exitCode: 0
        finishedAt: "2025-09-16T07:56:52Z"
        reason: Completed
        startedAt: "2025-09-16T06:56:52Z"
    name: test-container
    ready: true
    restartCount: 15
    started: true
    state:
      running:
        startedAt: "2025-09-16T07:56:53Z"
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-v7hlv
      readOnly: true
      recursiveReadOnly: Disabled
  hostIP: 192.168.2.155
  hostIPs:
  - ip: 192.168.2.155
  phase: Running
  podIP: 10.244.44.227
  podIPs:
  - ip: 10.244.44.227
  qosClass: BestEffort
  startTime: "2025-09-15T16:56:38Z"
```

Test with another branch:

```bash
cd test/
# Create a new branch and switch to it immediately
git checkout -b develop
# Commit the code
git add .
git commit -m "test"
git push origin develop
```
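After pushing `develop`, the Deployment in the `test` namespace should switch to the newly built tag. One way to script that check is to read `kubectl -n test get pod <pod> -o json` and compare `status.containerStatuses[].image` with the tag Jenkins built. The Go sketch below does only that comparison step, on JSON passed in as bytes; `podStatus` and `runningImage` are hypothetical helper names, and only the few fields used are decoded.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// podStatus keeps only the fields needed from `kubectl get pod <name> -o json`.
type podStatus struct {
	Status struct {
		ContainerStatuses []struct {
			Name  string `json:"name"`
			Image string `json:"image"`
			Ready bool   `json:"ready"`
		} `json:"containerStatuses"`
	} `json:"status"`
}

// runningImage returns the image and readiness reported for one container.
func runningImage(podJSON []byte, container string) (string, bool, error) {
	var p podStatus
	if err := json.Unmarshal(podJSON, &p); err != nil {
		return "", false, err
	}
	for _, cs := range p.Status.ContainerStatuses {
		if cs.Name == container {
			return cs.Image, cs.Ready, nil
		}
	}
	return "", false, fmt.Errorf("container %q not found in pod status", container)
}

func main() {
	// Trimmed sample shaped like the production Pod output shown earlier.
	sample := []byte(`{"status":{"containerStatuses":[
		{"name":"test-container","image":"192.168.2.151:30002/online/test:91addcc","ready":true}]}}`)
	img, ready, err := runningImage(sample, "test-container")
	if err != nil {
		panic(err)
	}
	fmt.Println(img, ready)
}
```

Checking readiness alongside the image matters here: with the `command` override in the Deployments above, the container restarts every hour, so a pod can briefly report the new image while not ready.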